In 1965, Gordon Moore defined a relationship between cadence and cost for computing innovation that came to be known as “Moore’s Law.” This rule both described and inspired the exponential growth that built the Information Age.

As Moore predicted, the number of transistors on an integrated circuit doubled roughly every two years, and processing power grew along with it. Chips got smaller, faster and cheaper. Transistors shrank, and energy requirements dropped. And in line with Moore’s vision, computers transformed our infrastructure, homes and thumbs. Markets acclimatized to this regular pace of progress, and the software industry flourished.

We’ve come to expect rapid improvements in technology. But can Moore’s Law go on forever?

Despite its success, doubts about the future of the Intel co-founder’s forecast are nothing new. Fears about the limits of fundamental physics have long accompanied the mandate to halve transistor sizes, and fresh difficulties regulating energy costs and heat have headlined the last decade of engineering challenges.

With speed bumps on the path of progress ahead, experts have become at once more contemplative and ambitious about the future of computing technologies.

In this Q&A, Ben Lee, Professor in Computer and Information Science (CIS) and Electrical and Systems Engineering (ESE), and André DeHon, Boileau Professor of Electrical Engineering in CIS and ESE, dig into the stakes of Moore’s Law and reflect on the consequences and opportunities of its possible end.

What do we owe to Moore’s Law?

André DeHon: Moore’s Law has been an engine of innovation and wealth creation. With our ability to regularly double the number of transistors in a circuit, we’ve been able to get creative with what computers can do. And because of Moore’s Law, advancements in technology that begin as high-end applications quickly come down in price and become consumer products. Moore’s Law has made computing ubiquitous, democratized technology and grown our economy.

Ben Lee: And thanks to Moore’s Law, we live in a world built by inexpensive computing deployed at massive scales. On one end, we have data center computing and data center–enabled services. On the other end, we have consumer devices and electronics. And between them, we have an incredibly rich software ecosystem enabled by the fact that computing is so abundant. We’ve been able to rely on a steady supply of chips with a lot of memory, processing power and speed, all with the guarantee that these will continue to increase at a regular pace and an exponential rate.

When we talk about the end of Moore’s Law, are we talking about the end of this abundance?

Ben Lee: Very possibly. If the underlying hardware becomes less abundant or less capable — if we can’t continue to improve on memory, processing power or speed — that will translate into constraints on what we can build on software. And we’re already running into trouble with scaling. Take augmented reality. If we want to expand what AR can do and Moore’s Law no longer holds, wearable devices risk becoming too bulky and hot, putting an entire form of media at risk of stalling. And think of AI. We’re seeing a lot of data centers and GPUs dedicated to AI applications. If those chips don’t get smaller, more power-efficient or less hot, we’re going to see real sprawl in data center buildings.

André DeHon: The democratization I mentioned earlier would also be at risk. We’re talking about widening gaps between well-resourced entities and everyday people. It will take a lot of money to buy all these chips and build these networks and data centers. Technology will cease to trickle down.

Engineers are experts in navigating constraints. The end of Moore’s Law would expose us to risk, but could that pressure also bring with it opportunities to try new things?

André DeHon: Absolutely. And this sheds light on a drawback of Moore’s Law. Because we’ve had the ability to keep decreasing transistor size to increase computing power, we’ve been throwing transistors at problems that didn’t require them as a solution. It’s a brute force method, and Moore’s Law has incentivized it at the expense of smarter ones like creative architectures and better coordination between software and hardware engineers. There are some exceptions. MP3 design was very clever in its encoding. It gave us digital music around a decade before Moore’s Law would have enabled it.

Ben Lee: And in the early 2000s, scientific computing — high-performance computing — required massive improvements in energy efficiency that Moore’s Law couldn’t have provided. The usual reliance on exponential growth in the number of cores on a chip was a dead end for this kind of application, and so we saw some real creative thinking — a shift from CPUs to GPUs — and renewed interest in custom design, including hardware–software codesign.

Since there’s already a track record of innovation that skirts Moore’s Law, is there a chance that we will continue to see the same kind of progress with a different hardware story?

Ben Lee: It’s possible. I’m not entirely optimistic about cost. These new technologies are going to be expensive, and we don’t yet have clear paths forward for equity of access.

André DeHon: There is, however, a lot to be optimistic about. Moore’s Law has been revolutionary, but it has also bred reliance on crude solutions to problems we could have been solving with creativity and efficiency. The future will be built by computer engineers who can now prioritize this kind of innovation. There’s a lot to catch up on.

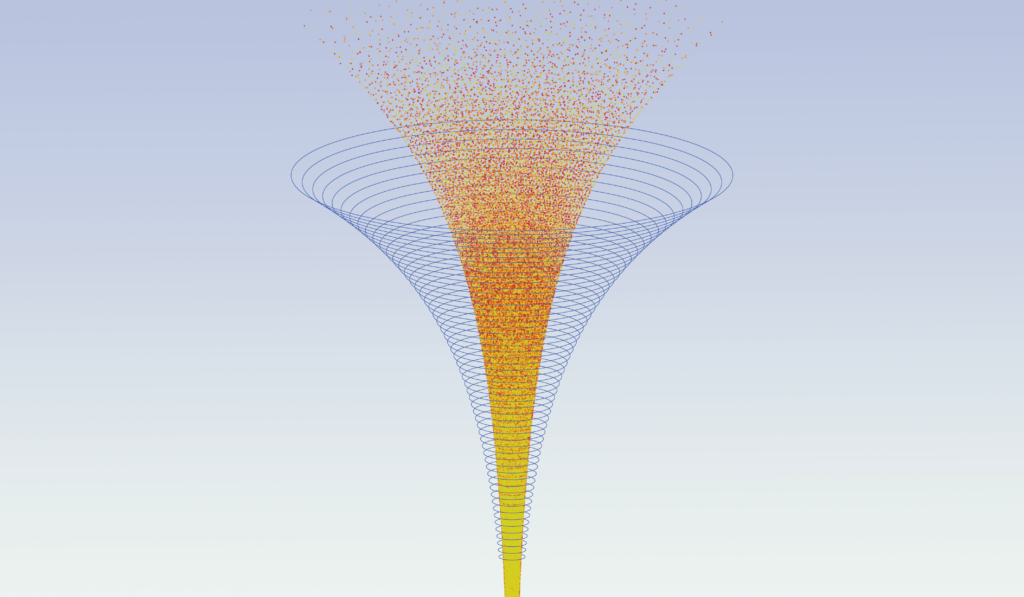

Story by Devorah Fischler / Illustration by Michael Artman